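The previous article gave a brief overview of Airflow's concepts and use cases. Today we install Airflow with Docker, and then get to know its specific features in more depth by using it.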

1 Containerized Airflow deployment

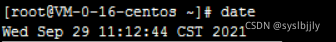

Host environment on Alibaba Cloud:

- Operating system: Ubuntu 20.04.3 LTS

- Kernel version: Linux 5.4.0-91-generic

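Installing Docker

To install Docker, follow the official documentation [1]. On a clean system there is no need to uninstall old versions first. Because this is a cloud host, you can take a snapshot beforehand in case a configuration change breaks the environment.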

    # Update the package index and install the prerequisites
    sudo apt-get update
    sudo apt-get install \
        ca-certificates \
        curl \
        gnupg \
        lsb-release

    # Add Docker's GPG key
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

    # Set up the Docker stable repository
    echo \
      "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
      $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

    # Refresh the package index so the new repository is picked up
    sudo apt-get update

    # List the installable docker-ce versions
    root@bigdata1:~# apt-cache madison docker-ce
     docker-ce | 5:20.10.12~3-0~ubuntu-focal | https://download.docker.com/linux/ubuntu focal/stable amd64 Packages
     docker-ce | 5:20.10.11~3-0~ubuntu-focal | https://download.docker.com/linux/ubuntu focal/stable amd64 Packages
     docker-ce | 5:20.10.10~3-0~ubuntu-focal | https://download.docker.com/linux/ubuntu focal/stable amd64 Packages
     docker-ce | 5:20.10.9~3-0~ubuntu-focal | https://download.docker.com/linux/ubuntu focal/stable amd64 Packages

    # Install command format
    # sudo apt-get install docker-ce=<VERSION_STRING> docker-ce-cli=<VERSION_STRING> containerd.io

    # Install a specific version
    sudo apt-get install docker-ce=5:20.10.12~3-0~ubuntu-focal docker-ce-cli=5:20.10.12~3-0~ubuntu-focal containerd.io
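
The version string passed to apt-get install is the second column of the apt-cache madison listing. As a minimal sketch, the helper below extracts it from the first line of that listing so it does not have to be copied by hand. The function name `first_madison_version` is hypothetical, and the sketch assumes the newest version is listed first, as in the listing above.

```shell
# Read `apt-cache madison <package>` output on stdin and print the version
# string (the second "|"-separated column) of the first line.
# Assumption: the first line is the newest version, as in the listing above.
first_madison_version() {
  awk -F'|' 'NR == 1 { gsub(/[ \t]/, "", $2); print $2 }'
}

# Hypothetical usage on the host (not executed here):
#   version="$(apt-cache madison docker-ce | first_madison_version)"
#   sudo apt-get install "docker-ce=${version}" "docker-ce-cli=${version}" containerd.io
#   docker --version
```

Pinning both docker-ce and docker-ce-cli to the same string keeps the daemon and the client on matching versions.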

Tuning the Docker configuration

In registry-mirrors, fill in your own registry accelerator address, for example the one provided by Alibaba Cloud. Keep this note out of the file itself, since JSON does not allow comments.

    {
      "data-root": "/var/lib/docker",
      "exec-opts": [
        "native.cgroupdriver=systemd"
      ],
      "registry-mirrors": [
        "https://****.mirror.aliyuncs.com"
      ],
      "storage-driver": "overlay2",
      "storage-opts": [
        "overlay2.override_kernel_check=true"
      ],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3"
      }
    }
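
The article does not name the file this configuration goes into; on Linux, Docker reads it from /etc/docker/daemon.json by default. A syntax error in that file can keep the daemon from starting, so it may be worth checking that the file parses as JSON first. The sketch below is one way to do that; `json_is_valid` is a hypothetical helper, and it assumes python3 is installed on the host.

```shell
# Succeed if the given file parses as JSON. Uses Python's bundled json.tool
# module, so python3 being installed is an assumption about the host.
json_is_valid() {
  python3 -m json.tool "$1" >/dev/null 2>&1
}

# Hypothetical usage on the Docker host (not executed here):
#   json_is_valid /etc/docker/daemon.json && sudo systemctl restart docker
#   docker info --format '{{.Driver}} {{.CgroupDriver}}'   # storage and cgroup drivers in use
```

A file that still contains a `#` comment fails this check, which is a quick way to catch that mistake before restarting Docker.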

Enabling start on boot

    systemctl daemon-reload
    systemctl enable --now docker.service

Installing Airflow in containers

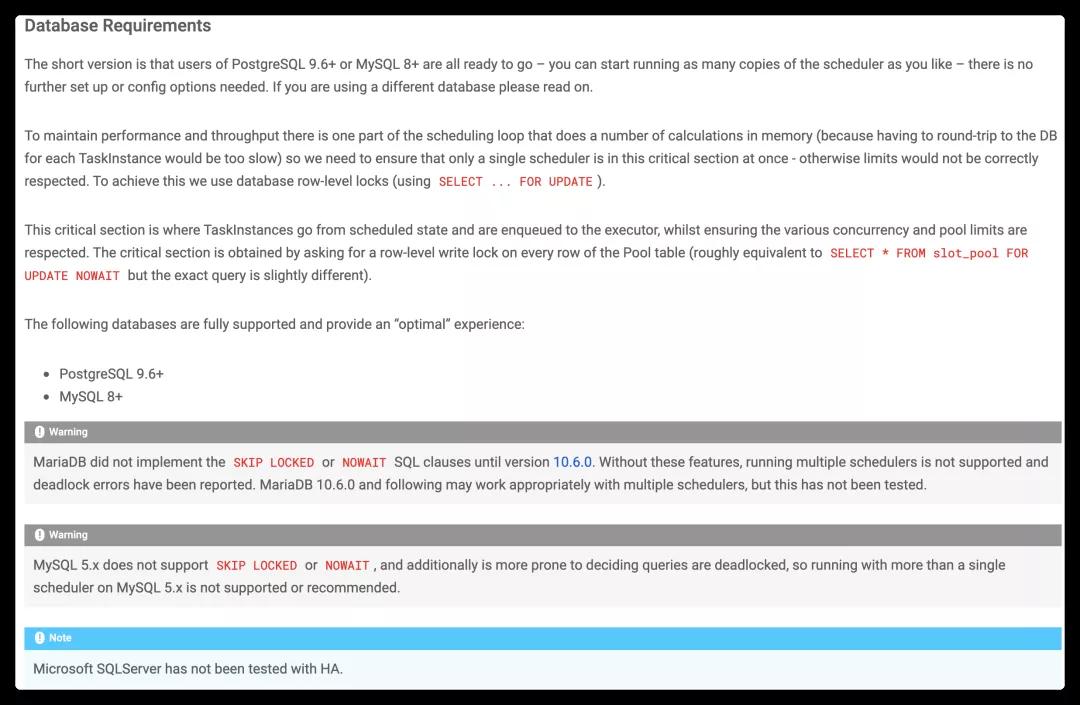

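Choosing the database

According to the official site, the recommended databases are MySQL 8+ and PostgreSQL 9.6+. The official docker-compose script [2] uses PostgreSQL. Since we use MySQL here, the contents of docker-compose.yml need to be adjusted: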

    ---
    version: '3'
    x-airflow-common:
      &airflow-common
      # In order to add custom dependencies or upgrade provider packages you can use your extended image.
      # Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml
      # and uncomment the "build" line below, Then run `docker-compose build` to build the images.
      image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.2.3}
      # build: .
      environment:
        &airflow-common-env
        AIRFLOW__CORE__EXECUTOR: CeleryExecutor
        # Replaced with the MySQL connection strings
        AIRFLOW__CORE__SQL_ALCHEMY_CONN: mysql+mysqldb://airflow:aaaa@mysql/airflow
        AIRFLOW__CELERY__RESULT_BACKEND: db+mysql://airflow:aaaa@mysql/airflow
        # Redis authentication is enabled for security; replace xxxx with the Redis password
        AIRFLOW__CELERY__BROKER_URL: redis://:xxxx@redis:6379/0
        AIRFLOW__CORE__FERNET_KEY: ''
        AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
        AIRFLOW__CORE__LOAD_EXAMPLES: 'true'
        AIRFLOW__API__AUTH_BACKEND: 'airflow.api.auth.backend.basic_auth'
        _PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:-}
      volumes:
        - ./dags:/opt/airflow/dags
        - ./logs:/opt/airflow/logs
        - ./plugins:/opt/airflow/plugins
      user: "${AIRFLOW_UID:-50000}:0"
      depends_on:
        &airflow-common-depends-on
        redis:
          condition: service_healthy
        mysql:  # changed to the MySQL service name
          condition: service_healthy

    services:
      mysql:
        image: mysql:8.0.27  # changed to a MySQL 8 image
        environment:
          MYSQL_ROOT_PASSWORD: bbbb  # MySQL root password
          MYSQL_USER: airflow
          MYSQL_PASSWORD: aaaa  # password for the airflow user
          MYSQL_DATABASE: airflow
        command:
          - --default-authentication-plugin=mysql_native_password  # default authentication plugin
          - --collation-server=utf8mb4_general_ci  # collation, per the official docs
          - --character-set-server=utf8mb4  # character set, per the official docs
        volumes:
          - /apps/airflow/mysqldata8:/var/lib/mysql  # persist MySQL data
          - /apps/airflow/my.cnf:/etc/my.cnf  # persist the MySQL configuration file
        healthcheck:
          test: mysql --user=$$MYSQL_USER --password=$$MYSQL_PASSWORD -e 'SHOW DATABASES;'  # healthcheck command
          interval: 5s
          retries: 5
        restart: always

      redis:
        image: redis:6.2
        expose:
          - 6379
        command: redis-server --requirepass xxxx  # enable password authentication in redis-server
        healthcheck:
          test: ["CMD", "redis-cli", "-a", "xxxx", "ping"]  # health check using the Redis password
          interval: 5s
          timeout: 30s
          retries: 50
        restart: always

      airflow-webserver:
        <<: *airflow-common
        command: webserver
        ports:
          - 8080:8080
        healthcheck:
          test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always
        depends_on:
          <<: *airflow-common-depends-on
          airflow-init:
            condition: service_completed_successfully

      airflow-scheduler:
        <<: *airflow-common
        command: scheduler
        healthcheck:
          test: ["CMD-SHELL", 'airflow jobs check --job-type SchedulerJob --hostname "$${HOSTNAME}"']
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always
        depends_on:
          <<: *airflow-common-depends-on
          airflow-init:
            condition: service_completed_successfully

      airflow-worker:
        <<: *airflow-common
        command: celery worker
        healthcheck:
          test:
            - "CMD-SHELL"
            - 'celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'
          interval: 10s
          timeout: 10s
          retries: 5
        environment:
          <<: *airflow-common-env
          # Required to handle warm shutdown of the celery workers properly
          # See https://airflow.apache.org/docs/docker-stack/entrypoint.html#signal-propagation
          DUMB_INIT_SETSID: "0"
        restart: always
        depends_on:
          <<: *airflow-common-depends-on
          airflow-init:
            condition: service_completed_successfully

      airflow-triggerer:
        <<: *airflow-common
        command: triggerer
        healthcheck:
          test: ["CMD-SHELL", 'airflow jobs check --job-type TriggererJob --hostname "$${HOSTNAME}"']
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always
        depends_on:
          <<: *airflow-common-depends-on
          airflow-init:
            condition: service_completed_successfully

      airflow-init:
        <<: *airflow-common
        entrypoint: /bin/bash
        # yamllint disable rule:line-length
        command:
          - -c
          - |
            function ver() {
              printf "%04d%04d%04d%04d" $${1//./ }
            }
            airflow_version=$$(gosu airflow airflow version)
            airflow_version_comparable=$$(ver $${airflow_version})
            min_airflow_version=2.2.0
            min_airflow_version_comparable=$$(ver $${min_airflow_version})
            if (( airflow_version_comparable < min_airflow_version_comparable )); then
              echo
              echo -e "\033[1;31mERROR!!!: Too old Airflow version $${airflow_version}!\e[0m"
              echo "The minimum Airflow version supported: $${min_airflow_version}. Only use this or higher!"
              echo
              exit 1
            fi
            if [[ -z "${AIRFLOW_UID}" ]]; then
              echo
              echo -e "\033[1;33mWARNING!!!: AIRFLOW_UID not set!\e[0m"
              echo "If you are on Linux, you SHOULD follow the instructions below to set "
              echo "AIRFLOW_UID environment variable, otherwise files will be owned by root."
              echo "For other operating systems you can get rid of the warning with manually created .env file:"
              echo "    See: https://airflow.apache.org/docs/apache-airflow/stable/start/docker.html#setting-the-right-airflow-user"
              echo
            fi
            one_meg=1048576
            mem_available=$$(($$(getconf _PHYS_PAGES) * $$(getconf PAGE_SIZE) / one_meg))
            cpus_available=$$(grep -cE 'cpu[0-9]+' /proc/stat)
            disk_available=$$(df / | tail -1 | awk '{print $$4}')
            warning_resources="false"
            if (( mem_available < 4000 )); then
              echo
              echo -e "\033[1;33mWARNING!!!: Not enough memory available for Docker.\e[0m"
              echo "At least 4GB of memory required. You have $$(numfmt --to iec $$((mem_available * one_meg)))"
              echo
              warning_resources="true"
            fi
            if (( cpus_available < 2 )); then
              echo
              echo -e "\033[1;33mWARNING!!!: Not enough CPUS available for Docker.\e[0m"
              echo "At least 2 CPUs recommended. You have $${cpus_available}"
              echo
              warning_resources="true"
            fi
            if (( disk_available < one_meg * 10 )); then
              echo
              echo -e "\033[1;33mWARNING!!!: Not enough Disk space available for Docker.\e[0m"
              echo "At least 10 GBs recommended. You have $$(numfmt --to iec $$((disk_available * 1024)))"
              echo
              warning_resources="true"
            fi
            if [[ $${warning_resources} == "true" ]]; then
              echo
              echo -e "\033[1;33mWARNING!!!: You have not enough resources to run Airflow (see above)!\e[0m"
              echo "Please follow the instructions to increase amount of resources available:"
              echo "   https://airflow.apache.org/docs/apache-airflow/stable/start/docker.html#before-you-begin"
              echo
            fi
            mkdir -p /sources/logs /sources/dags /sources/plugins
            chown -R "${AIRFLOW_UID}:0" /sources/{logs,dags,plugins}
            exec /entrypoint airflow version
        # yamllint enable rule:line-length
        environment:
          <<: *airflow-common-env
          _AIRFLOW_DB_UPGRADE: 'true'
          _AIRFLOW_WWW_USER_CREATE: 'true'
          _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
          _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}
        user: "0:0"
        volumes:
          - .:/sources

      airflow-cli:
        <<: *airflow-common
        profiles:
          - debug
        environment:
          <<: *airflow-common-env
          CONNECTION_CHECK_MAX_COUNT: "0"
        # Workaround for entrypoint issue. See: https://github.com/apache/airflow/issues/16252
        command:
          - bash
          - -c
          - airflow

      flower:
        <<: *airflow-common
        command: celery flower
        ports:
          - 5555:5555
        healthcheck:
          test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always
        depends_on:
          <<: *airflow-common-depends-on
          airflow-init:
            condition: service_completed_successfully
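
The airflow-init service in the file above refuses to continue on Airflow versions older than 2.2.0. Inside the compose file every `$` of that script is written as `$$`, because Compose would otherwise try to interpolate it. The following is a minimal sketch of the same version gate as a standalone script, so the idea can be tried outside Compose. It is not the script from the file: it is rewritten in portable sh, and `version_lt` is a hypothetical helper that replaces the original bash arithmetic comparison with a string comparison.

```shell
# Zero-pad each dot-separated component of a version to four digits,
# e.g. 2.2.3 becomes 0002000200030000 (a missing fourth component counts as 0).
ver() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split on dots into $1..$4
  IFS=$old_ifs
  printf '%04d%04d%04d%04d' "${1:-0}" "${2:-0}" "${3:-0}" "${4:-0}"
}

# Succeed if version $1 is older than version $2. The padded strings have equal
# length, so a string comparison orders them the same way as a numeric one.
version_lt() {
  expr "x$(ver "$1")" \< "x$(ver "$2")" >/dev/null
}

min_airflow_version=2.2.0
if version_lt "2.1.4" "$min_airflow_version"; then
  echo "2.1.4 is too old; minimum supported is $min_airflow_version"
fi
```

Comparing fixed-width strings avoids shell arithmetic, where a component with a leading zero such as 0008 would be read as an invalid octal number.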

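Compared with the official docker-compose.yaml, only the x-airflow-common, MySQL, and Redis settings were changed. The next step is to start the containers; before that, create the directories used for persistence:

    mkdir -p ./dags ./logs ./plugins
    # AIRFLOW_UID must be the UID of a regular user, and that user must have permission to create the directories above
    echo -e "AIRFLOW_UID=$(id -u)" > .env

If AIRFLOW_UID is not a regular user's UID, the containers fail at run time with an error saying the airflow module cannot be found.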
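
Because running the commands above as root silently writes AIRFLOW_UID=0, a small guard can help. The sketch below writes the .env file only for a non-root UID. The function name `write_airflow_env` is hypothetical, and treating "regular user" as "any UID other than 0" is an assumption made for this example.

```shell
# Write AIRFLOW_UID=<uid> to the given .env path, refusing root (UID 0).
#   $1 = UID to record, $2 = path of the .env file
write_airflow_env() {
  uid=$1
  env_file=$2
  if [ "$uid" -eq 0 ]; then
    echo "AIRFLOW_UID must belong to a regular user, not root" >&2
    return 1
  fi
  printf 'AIRFLOW_UID=%s\n' "$uid" > "$env_file"
}

# Hypothetical usage, run from the directory that holds docker-compose.yml:
#   write_airflow_env "$(id -u)" .env
```

When the check fails, nothing is written, so an existing .env file is left untouched.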

    docker-compose up airflow-init  # initialize the database and create the tables
    docker-compose up -d            # create the Airflow containers
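
The webserver can take a while to come up after the containers start. As a rough sketch, a retry loop can poll the same /health URL that the webserver healthcheck in the compose file uses, through the published port 8080. The helper name `wait_until` and the attempt and delay values are assumptions chosen for illustration.

```shell
# Run a command repeatedly until it succeeds or the attempts run out.
#   $1 = maximum attempts, $2 = seconds to sleep between attempts, rest = command
wait_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    # Sleep only when another attempt will follow.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Hypothetical usage on the host (not executed here):
#   wait_until 30 10 curl --fail --silent --output /dev/null http://localhost:8080/health \
#     && echo "webserver is up"
```

Passing the check as an ordinary command keeps the loop reusable, for example with the Flower URL on port 5555.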

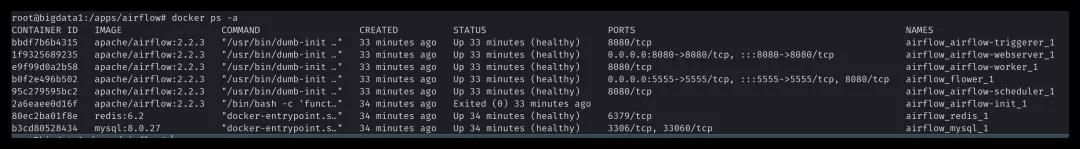

If a container's status shows unhealthy, run docker inspect $container_name to find the cause of the error. With that, the Airflow installation is complete.
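
The full docker inspect output is long, and the health check results sit under State.Health. The sketch below first picks out the unhealthy containers from docker ps output and then prints only that part. `list_unhealthy` is a hypothetical helper, and it assumes the Status column contains the text "(unhealthy)" for such containers.

```shell
# Read "name<TAB>status" lines on stdin and print the names whose status
# contains "(unhealthy)".
list_unhealthy() {
  awk -F'\t' '$2 ~ /\(unhealthy\)/ { print $1 }'
}

# Hypothetical usage on the host (not executed here):
#   docker ps --format '{{.Names}}\t{{.Status}}' | list_unhealthy | while read -r name; do
#     docker inspect --format '{{json .State.Health}}' "$name"
#   done
```

The Log entries inside State.Health hold the output of the most recent health check runs, which is usually where the failing command's error message appears.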

References

[1] Install Docker Engine on Ubuntu: https://docs.docker.com/engine/install/ubuntu/

[2] Official docker-compose.yaml: https://airflow.apache.org/docs/apache-airflow/2.2.3/docker-compose.yaml

Original article: https://mp.weixin.qq.com/s/VncpyXcTtlvnDkFrsAZ5lQ