This article shares Java code for create, delete, update, and query operations on HDFS, for your reference. The full listing follows.


import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.net.URI;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

public class FileOpreation {

    // NameNode address used by every operation below.
    private static final String HDFS_URI = "hdfs://alvis:9000";

    public static void main(String[] args) throws IOException {
        //createFile();
        //deleteFile();
        //copyFileToHdfs();
        //mkdirs();
        //delDirs();
        listDirectory();
        download();
    }

    // Connects to HDFS. FileSystem.get returns a cached, shared instance.
    private static FileSystem connect() throws IOException {
        Configuration configuration = new Configuration();
        return FileSystem.get(URI.create(HDFS_URI), configuration);
    }

    // Creates /home/test.txt and writes a short message into it.
    public static void createFile() throws IOException {
        FileSystem fSystem = connect();
        byte[] fileContentBuff = "hello hadoop world, test write file !\n".getBytes(StandardCharsets.UTF_8);
        Path dfs = new Path("/home/test.txt");
        // Write the whole buffer, then close the stream so the data is flushed.
        try (FSDataOutputStream outputStream = fSystem.create(dfs)) {
            outputStream.write(fileContentBuff);
        }
    }

    // Deletes /home/test.txt and reports the result.
    public static void deleteFile() throws IOException {
        FileSystem fSystem = connect();
        Path deletF = new Path("/home/test.txt");
        boolean delResult = fSystem.delete(deletF, true);
        System.out.println(delResult ? "Delete succeeded" : "Delete failed");
    }

    // Uploads a local file into the /home directory on HDFS.
    public static void copyFileToHdfs() throws IOException {
        FileSystem fSystem = connect();
        Path src = new Path("e:\\serializationtest\\apitest.txt");
        Path destSrc = new Path("/home");
        fSystem.copyFromLocalFile(src, destSrc);
    }

    // Creates the /test directory, including any missing parents.
    public static void mkdirs() throws IOException {
        FileSystem fSystem = connect();
        Path src = new Path("/test");
        fSystem.mkdirs(src);
    }

    // Deletes the /test directory and everything under it.
    public static void delDirs() throws IOException {
        FileSystem fSystem = connect();
        Path src = new Path("/test");
        // The second argument enables recursive deletion.
        fSystem.delete(src, true);
    }

    // Lists /output, printing block details for files and the path for directories.
    public static void listDirectory() throws IOException {
        FileSystem fSystem = connect();
        FileStatus[] fStatus = fSystem.listStatus(new Path("/output"));
        for (FileStatus status : fStatus) {
            if (status.isFile()) {
                System.out.println("File path: " + status.getPath().toString());
                System.out.println("Replication factor: " + status.getReplication());
                System.out.println("Block size: " + status.getBlockSize());
                // Query block locations for the whole file, not only the first block.
                BlockLocation[] blockLocations = fSystem.getFileBlockLocations(status, 0, status.getLen());
                for (BlockLocation location : blockLocations) {
                    System.out.println("Host name: " + location.getHosts()[0]);
                    System.out.println("DataNode address (IP:port): " + location.getNames()[0]);
                }
            } else {
                System.out.println("Directory: " + status.getPath().toString());
            }
        }
    }

    // Downloads /input/wc.jar from HDFS to a local file.
    public static void download() throws IOException {
        Configuration configuration = new Configuration();
        // The key is case-sensitive: fs.defaultFS.
        configuration.set("fs.defaultFS", HDFS_URI);
        FileSystem fSystem = FileSystem.get(configuration);
        // try-with-resources closes both streams even if the copy fails.
        try (FSDataInputStream inputStream = fSystem.open(new Path("/input/wc.jar"));
             FileOutputStream outputStream = new FileOutputStream(new File("e:\\learnlife\\download\\wc.jar"))) {
            IOUtils.copyBytes(inputStream, outputStream, 4096, false);
        }
        System.out.println("Download succeeded!");
    }
}

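The download method in the listing copies an HDFS input stream into a local file. As a rough illustration of that copy-and-close pattern, here is a minimal sketch that uses only the JDK. In-memory streams stand in for the HDFS and local file streams, and the class name `StreamCopySketch` and its `copy` helper are hypothetical names chosen for this example, not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Illustrative helper, not a Hadoop class.
final class StreamCopySketch {

    /** Copies every byte from in to out through a fixed buffer and returns the byte count. */
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[4096];
        long total = 0;
        int read;
        // read() returns -1 once the end of the stream is reached.
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
            total += read;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hadoop world".getBytes(StandardCharsets.UTF_8);
        // Assumption: in-memory streams stand in for FSDataInputStream and FileOutputStream.
        // try-with-resources closes both streams even if the copy throws.
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out);
            System.out.println(copied + " bytes copied");
        }
    }
}
```

Closing the streams matters most on the write side: when writing to HDFS, data that has not been flushed and closed may not be visible to other readers.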

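Approach:

1. Define the address (URI) of the virtual machine that runs HDFS.

2. Create the Configuration object used to access HDFS remotely.

3. Obtain the distributed file system object, FileSystem.

4. Specify the path.

5. Call the required operation on the FileSystem object.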
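The first and fourth steps both come down to URIs: the address `hdfs://alvis:9000` carries a scheme, a host, and a port, and Hadoop uses the scheme and authority to decide which file system to connect to. The sketch below uses only `java.net.URI` to show how that address breaks down and how an absolute path combines with it. The class `HdfsUriParts` and its methods are hypothetical names for this illustration, not part of the Hadoop API.

```java
import java.net.URI;

// Illustrative helper, not a Hadoop class.
final class HdfsUriParts {

    /** Returns "scheme|host|port" for a file system address such as hdfs://alvis:9000. */
    static String describe(String address) {
        URI uri = URI.create(address);
        return uri.getScheme() + "|" + uri.getHost() + "|" + uri.getPort();
    }

    /** Resolves an absolute path such as /home/test.txt against the file system address. */
    static String qualify(String address, String absolutePath) {
        return URI.create(address).resolve(absolutePath).toString();
    }

    public static void main(String[] args) {
        // Address and path taken from the listing above.
        System.out.println(describe("hdfs://alvis:9000"));
        // prints "hdfs|alvis|9000"
        System.out.println(qualify("hdfs://alvis:9000", "/home/test.txt"));
        // prints "hdfs://alvis:9000/home/test.txt"
    }
}
```

A malformed address makes `URI.create` throw `IllegalArgumentException`, so checking the address this way before connecting is one option for failing early.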


Original link: https://blog.csdn.net/qq_41395106/article/details/89036014

相关文章

猜你喜欢

- 个人服务器网站搭建:如何选择合适的服务器提供商? 2025-06-10

- ASP.NET自助建站系统中如何实现多语言支持? 2025-06-10

- 64M VPS建站:如何选择最适合的网站建设平台? 2025-06-10

- ASP.NET本地开发时常见的配置错误及解决方法? 2025-06-10

- ASP.NET自助建站系统的数据库备份与恢复操作指南 2025-06-10

TA的动态

- 2025-07-10 怎样使用阿里云的安全工具进行服务器漏洞扫描和修复?

- 2025-07-10 怎样使用命令行工具优化Linux云服务器的Ping性能?

- 2025-07-10 怎样使用Xshell连接华为云服务器,实现高效远程管理?

- 2025-07-10 怎样利用云服务器D盘搭建稳定、高效的网站托管环境?

- 2025-07-10 怎样使用阿里云的安全组功能来增强服务器防火墙的安全性?

快网idc优惠网

QQ交流群

您的支持,是我们最大的动力!

热门文章

-

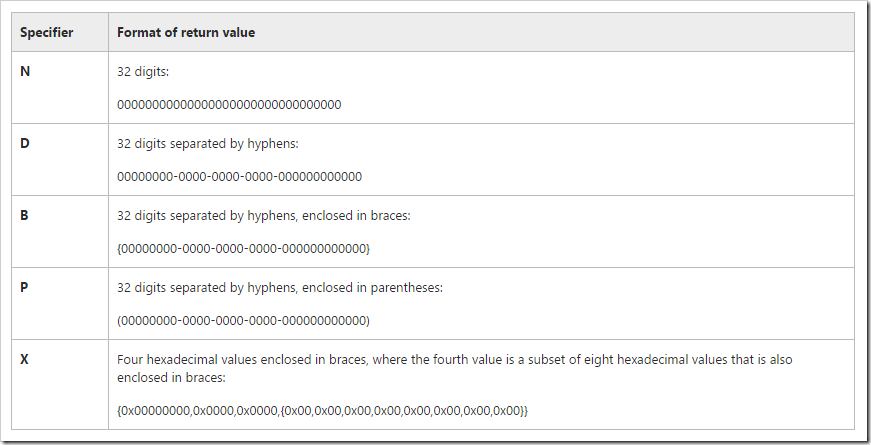

asp.net System.Guid ToString五种格式

2025-05-29 43 -

2025-05-27 65

-

2025-06-04 32

-

2025-05-29 50

-

2025-06-04 108

热门评论